The Dawning of the Age of the Metaverse

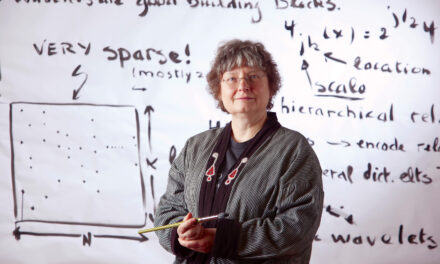

As I slid the headset’s clear lenses down over my eyes, the plastic skull on the table in front of me jumped into technicolor. Several inches beneath its smooth, white cranium, I could see vibrantly colored neurological structures waiting to be punctured by the medical instrument I held in my quivering hand.

Lining up the long, thin catheter with a small hole in the back of the skull, I slowly began pushing its tip downward. Numbers floating off to my right told me exactly how far the instrument was from reaching its goal. My target was highlighted on the right ventricle—one of two large, fluid-filled structures deep inside the brain that keep it buoyant and cushioned. As I carefully adjusted my angle of attack, a lightsaber-like beam extended through the current trajectory of my catheter, burning red when its path was off kilter and glowing green when it was on target.

It’s a good thing I’m not a neurosurgeon and that this was only an augmented reality demonstration, or the patient’s brain would have been stirred like a bowl of ramen. Even the slightest twitch or tremble turned the lightsaber to the dark side, indicating it was off track.

“We had a group of medical students in here a few weeks ago who were making a game of it,” said Maria Gorlatova, the Nortel Networks Assistant Professor of Electrical and Computer Engineering at Duke. “We 3D printed some molds to create a Jell-O–like filling to make it feel more realistic. And even for them it’s a real challenge to get the catheter placed correctly.”

The system, called NeuroLens, is the brainchild of PhD student Sarah Eom, and is one of many AR projects underway in Gorlatova’s lab. She hopes the tool will one day help neurosurgeons with a relatively common but delicate procedure: placing catheters into the brain’s ventricles to drain excess fluid. And not just in practice, but with real patients.

Surgical procedures and other medical uses are among the applications furthest along the development track for augmented reality. While the tasks can be intricate and delicate, they have the advantage of taking place on a stationary object, where a group of cameras can surround the table and keep incredibly close watch on every minuscule movement while relaying the data via a steady computer connection.

But these advantages evaporate even in the relatively controlled environment of one’s own home, much less the streets of an urban environment. That makes the true potential of the Metaverse possible only in Hollywood depictions, for the time being. We can imagine hungry people walking down a street, seeing the names, menus and reviews of restaurants hovering over buildings as they pass. Directions are overlaid on the sidewalks, while potential hazards of fast-moving bikes and cars are highlighted in red. People come home to lights automatically optimized to the task at hand, while cabinets, appliances and shelves provide useful information about their current contents.

These visions of the future may currently be relegated to shows like Black Mirror, but they’re actually not as far away as one might think. “The graphic displays and processing needed for this level of augmented reality interaction is actually already pretty decent. That’s not the bottleneck,” Gorlatova said. “We just need to develop the supporting infrastructure in terms of mobile algorithms, local processing power and more efficient AI.”

Enabling the visionary future of the Metaverse requires research projects of many levels, ranging from AI-accelerating hardware to localized computing networks that can tackle complex software in the blink of an eye. To discover how researchers are approaching these challenges, and perhaps even to get a glimpse of tomorrow’s Metaverse, one need look no further than Duke ECE.

Undergraduate engineering student Seijung Kim is guided through a tricky brain catheterization procedure with the help of an AR experience designed by PhD student Sarah Eom.

Hai Li, Jeffrey Krolik and Yiran Chen belong to the leadership team behind Athena, the National Science Foundation AI Institute for Edge Computing Leveraging Next Generation Networks.

Research

Grounding Control from the Cloud

One of the largest hurdles to clear before augmented reality can be integrated into our daily routines is bringing the computational power required to run these complex programs out of the cloud. The AI that powers even today’s simpler Metaverse interactions, like telling a smart speaker to turn on a light, is currently too complicated to run anywhere except on massive servers several routers away from the end user. While the resulting response may only take a second or two, that’s far too slow to enable the real-time environment that researchers envision.

One approach to shaving this lag time down to acceptable levels is called “edge computing.” This can either mean making use of local processing power waiting dormant for instructions or building more localized data processing centers that only handle nearby requests.

The latter is the focal point of the Duke-led, five-year, $20 million center called the AI Institute for Edge Computing Leveraging Next-generation Networks, or Athena for short. Its goal is to support the development of advanced mobile networks and a broad matrix of local datacenters to improve the speed, security and accessibility of data processing on a national scale.

“Right now, a small handful of large carriers provide the wireless networks,” said Yiran Chen, professor of ECE and director of Athena. “They sell the devices and they offer the cloud computing services. In the future Athena envisions, large datacenters will be augmented by thousands of small, local datacenters that are much closer to the edge.”

Through a collaboration with the Town of Cary, North Carolina, and small edge equipment vendors and service providers, Athena is already working to demonstrate how the developed edge computing system could work in a local community.

Chen also has a grant with collaborators at George Mason University to model how augmented reality systems are configured to distribute their graphic and data processing tasks between the various microprocessors on the device with the aim of reducing overall computational loads. Similarly, Gorlatova’s PhD student Lin Duan is working to make the algorithms involved more efficient by figuring out how to best supplement large public datasets with small, local datasets to improve the performance of Metaverse-focused AI.

Beyond improving algorithmic performance, Duke researchers are also working to improve the hardware installed on augmented reality devices. One example is creating an entirely new type of electronic component called a “memristor” that features elements of memory as well as simple binary processing. If deployed in the right way, these kinds of components could create “neuromorphic” hardware that operates more like the human brain than a traditional microprocessor, which could make certain AI tasks like pattern recognition much more efficient.

This line of research is currently being pursued by Helen Li, the Clare Boothe Luce Professor of Electrical and Computer Engineering with a joint appointment in computer science, and new ECE professor Tania Roy. “The brain isn’t great at calculating large numbers like a computer, but it’s very good at pattern recognition,” Roy said. “While we don’t fully understand how the brain works, we can model the basic structure of neurons and synapses to make them better at pattern recognition. For example, the more times a memristor sees the same input, the stronger its response, while inputs that don’t repeat become forgotten.”

As these efforts mature and are applied to real-world devices, they should make energy- and processing-greedy algorithms better suited for the small headset and handheld devices demanded by tomorrow’s Metaverse. And the more tasks that can be kept to local datacenters or the devices themselves, the more security and privacy concerns can be addressed.

Keeping Data Private in the Metaverse

Another challenge facing the widespread adoption of Metaverse technology is security and privacy. To truly function seamlessly out in the real world, augmented reality devices have to interact with many other devices and processing centers. And complex AI algorithms demand large, complex datasets to learn from, which usually means users sharing their data with the developers. Besides faster processing, data privacy is another advantage of edge computing and a focus of the Athena center.

“Keeping data close—as close as the palm of your hand—is more secure because you’re not sharing that information with a huge datacenter. It also delivers more personalized results,” said Jeffrey Krolik, ECE professor and managing director of Athena.

Consider a wearable sleep monitor like a smart watch. That device might collect biometric data alongside lifestyle data, like how much caffeine you normally consume, and combine it with environmental data, like the temperature of your house. While it might be worthwhile to amalgamate a small number of users’ data streams to look for commonalities (is nearby construction disrupting the sleep of an entire neighborhood?), there’s probably not much utility in combining the data of a North Carolinian with that of a Texan at a server farm a thousand miles away.

Gorlatova and Li also have projects focused on privacy underway. For Gorlatova, it’s creating a set of “virtual eyes” that developers can use to train their Metaverse AI programs. Where a person is looking during an augmented reality session is crucial information that can greatly enhance the experience. But it can also reveal personal information, meaning users might not want to share their eye movement data with companies.

“Where you’re prioritizing your vision says a lot about you as a person,” Gorlatova said. “It can inadvertently reveal sexual and racial biases, interests that we don’t want others to know about, and information that we may not even know about ourselves.”

Gorlatova and her students created “EyeSyn,” which replicates how human eyes view the world in certain scenarios well enough for companies to use it as a baseline to train new Metaverse platforms and software. With a basic level of competency, commercial software can then achieve even better results by personalizing its algorithms after interacting with specific users.

Also in the realm of training AI while protecting privacy is Li’s “LEARNER” platform. Developed with colleagues at the University of Pittsburgh, the platform allows multiple organizations to share private data securely to better train machine learning models. Originally targeted at patient data and health care algorithms, the federated approach could easily be a boon to any AI company worried about protecting user data.

The system uses a single AI model housed in a central cloud that is provided to users in multiple locations. Each location runs the AI model with its own data and produces a new set of weight parameters, which is in turn sent back to the cloud. The central AI model then uses all of the information to develop a single updated algorithm. The process is repeated until the AI model is as good as it can get.

Because only the weight parameters, and not the actual data, are shared with the cloud, the technique sidesteps concerns about data privacy, yet the final trained model still represents data from all users involved.

“The original information remains hidden on local computers,” explained Li. “For a large model, the process typically requires about 50-100 rounds of training between the local entities and the cloud. It sounds like it might take a long time, but in fact only takes a matter of hours.”
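The round-trip described above can be sketched in a few lines of Python. This is a minimal illustration and not the LEARNER platform itself: the article does not say how the cloud combines the returned weights, so the sketch assumes a plain size-weighted average (the common "federated averaging" scheme), and the tiny linear model, the `make_site` helper and the synthetic data are all hypothetical stand-ins.

```python
import random

def local_update(weights, data, lr=0.1, epochs=5):
    """One site's step: refine the shared model on its own private (x, y) pairs."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)  # only these weights leave the site, never the data

def federated_round(global_weights, sites):
    """Cloud step: send the model out, then average the returned weights.

    Averaging weighted by dataset size is an assumption of this sketch.
    """
    updates = [(local_update(global_weights, data), len(data)) for data in sites]
    total = sum(n for _, n in updates)
    w = sum(u[0] * n for u, n in updates) / total
    b = sum(u[1] * n for u, n in updates) / total
    return (w, b)

def train(sites, rounds=100):
    """Repeat the exchange for a fixed number of rounds."""
    weights = (0.0, 0.0)
    for _ in range(rounds):
        weights = federated_round(weights, sites)
    return weights

def make_site(rng, n):
    """Hypothetical private dataset: noisy samples of y = 3x + 1."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.01)) for x in xs]

rng = random.Random(0)
sites = [make_site(rng, 20), make_site(rng, 50), make_site(rng, 30)]
w, b = train(sites)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Each site only ever returns a pair of numbers, yet the shared model ends up close to the line that all three private datasets were drawn from.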

Taking Advantage of Technical Advances

With all of this work making strides at Duke as well as countless other institutions around the world, it’s easy to believe the Metaverse of the movies isn’t so far in the future. It’s even easier to believe if you visit Gorlatova’s lab.

Aside from the brain-puncturing augmented reality project, she’s also working with ophthalmologists at Duke Medical Center on a system to help guide doctors in retinal laser therapy, a common treatment for various retinal issues like proliferative diabetic retinopathy and retinal tears. The group is currently creating the foundational augmented reality framework for visualizing a patient-specific retina model by labeling ocular landmarks and sites that require treatment, with the end goal of guiding doctors in real time during procedures.

In another corner of her lab, PhD candidate Tim Scargill is working to make an augmented reality system that changes the environment it’s operating in to improve performance. After a smartphone scans a table with a black and white checkerboard on it, the augmented reality system kicks in and tops the checkerboard’s image with a virtual fox. But when Scargill swaps the checkerboard out for a piece of paper filled with fine-point paragraphs, the system has more difficulty determining where exactly the fox should be placed. To compensate, it automatically brightens a light bulb above, and the fox appears soon after right where it’s meant to be.

The fox project highlights one of the larger challenges yet to be fully surmounted by the augmented reality community—accurately viewing and placing objects in the real world. Another project Gorlatova is pursuing in collaboration with Neil Gong, assistant professor of ECE, involves making these visual systems more robust.

Augmented reality systems tend to “see” the world as point clouds consisting of thousands of individual datapoints. Rather than simply comparing an entire object’s point cloud to an existing database to figure out what it is, their approach instead uses only a random fraction of the points at any given time. By choosing random points to determine what an object is thousands of times and picking the answer that comes up most, the system is less likely to make a mistake. It’s also less likely to be fooled by an attack that adds datapoints to the cloud to create confusion.
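The voting idea can be illustrated with a short Python sketch. It is not the researchers' system: `base_classifier` is a deliberately crude, hypothetical stand-in that labels a cloud "tall" or "wide" from its bounding box, and the subset size, trial count and synthetic clouds are assumptions chosen only to make the effect visible.

```python
import random
from collections import Counter

def base_classifier(points):
    """Toy stand-in for a point-cloud model: compare extent along x and z."""
    xs = [p[0] for p in points]
    zs = [p[2] for p in points]
    return "tall" if (max(zs) - min(zs)) > (max(xs) - min(xs)) else "wide"

def vote_classify(points, subset_size=16, trials=1000, seed=0):
    """Classify many small random subsets and return the most common label."""
    rng = random.Random(seed)
    votes = Counter(
        base_classifier(rng.sample(points, subset_size)) for _ in range(trials)
    )
    return votes.most_common(1)[0][0]

rng = random.Random(1)
# A "wide" object: x spans 0 to 4, while y and z span only 0 to 1.
cloud = [(rng.uniform(0, 4), rng.uniform(0, 1), rng.uniform(0, 1))
         for _ in range(2000)]
# Simulated attack: five extra points placed far away along z.
attacked = cloud + [(2.0, 0.5, 50.0 + i) for i in range(5)]

print("whole cloud, attacked:", base_classifier(attacked))
print("subset voting, attacked:", vote_classify(attacked))
```

Five injected points are enough to flip the label when the whole cloud is classified at once, but most 16-point subsets never contain them, so the majority vote still returns the original answer.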

“My laboratory is focused on improving augmented reality’s spatial awareness of where things are, semantic awareness of what people are trying to do, and user context awareness of how people tend to behave in certain situations,” Gorlatova said. “Edge computing is absolutely one of the key enablers of these new capabilities, and of course privacy is a topic that absolutely needs to be addressed so that the Metaverse doesn’t grow into some dystopian nightmare. Instead, it can become the place we’ve been dreaming about.”